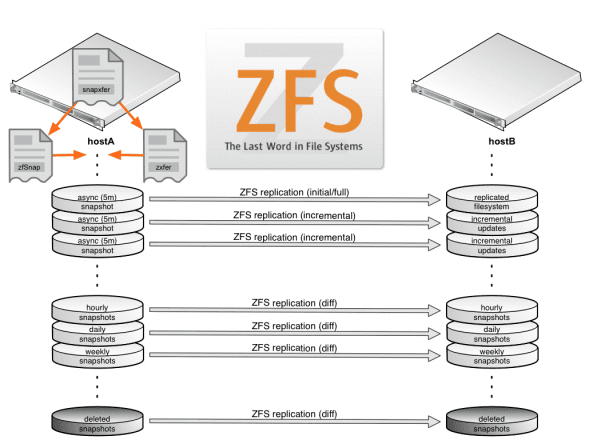

I frequently need to create ZFS snapshots and replicate them from one host to another for backup and disaster recovery. Many blog posts and articles present custom scripts that use zfs send/recv to automate this task, and they all work to some extent. I have tried most of them, but recently settled on two tools in particular that I've found robust enough to meet all of my needs.

I have written a simple shell wrapper, which I call snapxfer, around zfSnap by Aldis Berjoza and zxfer by Ivan Dreckman. The snapxfer script controls which ZFS filesystems will have snapshots taken, the snapshot frequency, and where the snapshots will be replicated to, while zfSnap and zxfer handle the heavy lifting. Both of these tools are in wide production use and seem fairly well maintained.

Snapshot Rotation

The snapxfer script takes a single argument that defines the snapshot interval. It currently accepts four interval types (plus a purge argument that only expires old snapshots):

- async – will create a snapshot meant for asynchronous disaster recovery purposes and send it to a remote host, with an expiration of one hour

- hourly – will create a snapshot with an expiration of one day

- daily – will create a snapshot with an expiration of one week

- weekly – will create a snapshot with an expiration of one month
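
The mapping from interval to expiration can be sketched as a small lookup. This is only an illustration: the function name ttl_for is hypothetical and not part of snapxfer, and the TTL strings are the ones passed to zfSnap -a in the script later in this post.

```shell
#!/bin/sh
# Hypothetical helper (not part of snapxfer): map an interval name to the
# TTL string that the script passes to "zfSnap -a".
ttl_for() {
    case "$1" in
        async)  echo "1h" ;;   # one hour
        hourly) echo "1d" ;;   # one day
        daily)  echo "1w" ;;   # one week
        weekly) echo "1m" ;;   # one month
        *)      return 1 ;;    # unknown interval
    esac
}

# Print the mapping for every interval
for i in async hourly daily weekly; do
    echo "$i -> $(ttl_for "$i")"
done
```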

The snapxfer script is run via cron on the following schedule:

- An async snapshot is made every 5 minutes and sent to the secondary host

- An hourly snapshot is made at one minute past every hour

- A daily snapshot is made every day at 12:02am

- A weekly snapshot is made every Sunday at 12:03am
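
One way to express this schedule as crontab entries is sketched below. The install path /root/bin/snapxfer is an assumption, and the five-minute interval is written as an explicit list of minutes because I would not count on every cron implementation accepting step syntax such as */5.

```shell
#!/bin/sh
# Assumed install location of the script; adjust to wherever it lives.
SNAPXFER=/root/bin/snapxfer

# Print crontab entries matching the schedule described above.
# Fields: minute hour day-of-month month day-of-week command
print_schedule() {
    cat <<EOF
0,5,10,15,20,25,30,35,40,45,50,55 * * * * $SNAPXFER async
1 * * * * $SNAPXFER hourly
2 0 * * * $SNAPXFER daily
3 0 * * 0 $SNAPXFER weekly
EOF
}

print_schedule
```

The output can be reviewed and then added to root's crontab by hand.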

The schedule I use is slightly staggered as a precaution to avoid any possible disk contention or unnecessary system load. Instead of writing complex rotation rules, this schedule will ensure a range of snapshots exists at any given time. zfSnap takes care of expiring the snapshots based on a time-to-live (TTL) value embedded in the snapshot name. As configured, snapxfer will call on zfSnap to expire the snapshots as part of the daily interval.
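
To illustrate what a TTL embedded in the snapshot name means, the sketch below pulls the TTL suffix out of a name. It assumes zfSnap names snapshots in the form dataset@YYYY-MM-DD_HH.MM.SS--TTL; the example name and the ttl_of helper are made up for illustration, so it is worth checking the names on your own system with zfs list -t snapshot.

```shell
#!/bin/sh
# Hypothetical helper: print the TTL suffix of a zfSnap-style snapshot name.
# Assumes the TTL is everything after the last "--" in the name.
ttl_of() {
    case "$1" in
        *--*) echo "${1##*--}" ;;   # strip everything up to the last "--"
        *)    return 1 ;;           # no TTL marker in the name
    esac
}

# Made-up example name for a daily snapshot
ttl_of "mypool/data@2013-06-01_00.02.00--1w"
```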

Remote Replication

While zfSnap handles snapshot creation, zxfer is responsible for replicating filesystem and snapshot changes to a remote host. As configured, all filesystems defined in snapxfer, along with any snapshots created by zfSnap (including hourly/daily/weekly), will be replicated to the secondary host every five minutes. When zfSnap expires snapshots on the primary, the same snapshots will also be expired on the secondary during the next replication run.

The snapxfer script is set up with the assumption that you have established passwordless SSH key authentication between the root user on hostA and hostB. With modification, you could instead use sudo or create a separate user that has been granted only the delegated ZFS permissions (via zfs allow) necessary to perform the task.
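
As a rough sketch of that setup, the snippet below only prints the commands involved so they can be reviewed before anything is run. The user name backup, the key path, and the permission list are all assumptions; the permissions shown are a starting point and may not cover everything zxfer needs on the receiving side.

```shell
#!/bin/sh
# Dry-run sketch: prints setup commands instead of executing them.
DHOST=hostB      # destination host, as in snapxfer
RUSER=backup     # hypothetical unprivileged user on the destination
POOL=zroot       # destination pool used by snapxfer

print_setup() {
    cat <<EOF
# On hostA, as root: generate a key pair with an empty passphrase
ssh-keygen -t rsa -f /root/.ssh/id_rsa -N ""

# Append /root/.ssh/id_rsa.pub to the authorized_keys file of
# root (or $RUSER) on $DHOST

# Optional, on $DHOST as root: delegate receive-side permissions
zfs allow -u $RUSER create,mount,receive,destroy $POOL
EOF
}

print_setup
```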

Failover and Recovery

Checking for failover conditions within snapxfer is still a TODO item, and recovery is still a manual process at the moment. A function can be added to snapxfer that looks at a given failover mechanism’s state and prevents snapshots from being created or replicated. Automated recovery is also a TODO item, which would help facilitate sending initial/incremental snapshots from secondary to primary with as little downtime as possible. In my particular use case I’m able to schedule reasonable downtime windows if a disaster of this sort occurs.
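
A minimal sketch of such a guard function follows. It assumes the failover mechanism creates a marker file while this host is not the active primary; the file path and the function name are hypothetical, and a real check would query whatever failover tool is actually in use.

```shell
#!/bin/sh
# Hypothetical marker file: assumed to exist while this host is NOT the
# active primary (for example, created by the failover mechanism).
STANDBY_FLAG=${STANDBY_FLAG:-/var/run/snapxfer.standby}

# Succeeds (returns 0) only when this host should take and send snapshots.
is_active_primary() {
    [ ! -e "$STANDBY_FLAG" ]
}

if is_active_primary; then
    echo "active primary: proceeding with snapshots"
else
    # In snapxfer, the script would exit here, before the case block
    echo "standby: skipping snapshots and replication"
fi
```

Placed near the top of snapxfer, a check like this would stop both zfSnap and zxfer from running on a host that has handed over its role.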

Manual Recovery

In the event that the secondary has taken over for the primary, the manual steps for reverting back to the primary would be as follows:

- On the primary, rename or destroy the stale filesystems

- On the secondary, shut down all services that access the filesystems in question

- On the secondary, create a snapshot of each filesystem (e.g. zfs snapshot mypool/data@migrate)

- On the secondary, send the snapshots to the primary (e.g. zfs send -R mypool/data@migrate | ssh primary "zfs recv -vFd mypool")

The primary should now have up-to-date filesystems and services can be restarted there.
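
When several filesystems are involved, the snapshot and send steps can be generated in a loop. The sketch below is a dry run that only prints the commands for the datasets used in this post; the host name primary and the snapshot name migrate are taken from the examples above, and the recovery_commands function is hypothetical.

```shell
#!/bin/sh
# Dry-run sketch: prints the recovery commands to run on the secondary.
DATASETS="mypool/data mypool/media mypool/cloud"
PRIMARY=primary      # host name of the primary
SNAPNAME=migrate     # name of the one-off migration snapshot

recovery_commands() {
    for dset in $DATASETS; do
        pool=${dset%%/*}    # pool name is the part before the first "/"
        echo "zfs snapshot $dset@$SNAPNAME"
        echo "zfs send -R $dset@$SNAPNAME | ssh $PRIMARY \"zfs recv -vFd $pool\""
    done
}

recovery_commands
```

Printing the commands first leaves room to confirm that services are stopped and the stale filesystems on the primary are out of the way before any data is sent.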

snapxfer script

This script would be located somewhere on hostA.

#!/bin/sh

# Local ZFS filesystems that will have snapshot logic applied
DATASETS="mypool/data \
mypool/media \
mypool/cloud"

# Destination host for snapshot replication
DHOST=hostB

# Output logfile
LOGFILE=/var/log/snapxfer.log

# Tools that help implement snapshot logic and transfer
ZSNAP=/opt/local/sbin/zfSnap
ZXFER=/opt/local/sbin/zxfer

######################################################################
# Main logic                                                         #
######################################################################

interval=$1

# Print usage and exit; called when the interval is missing or unknown
usage ()
{
    echo ""
    echo "Usage: $0 <interval> (async|hourly|daily|weekly|purge)"
    echo ""
    echo "* Asynchronous DR snapshots are kept for 1 hour"
    echo "* Hourly snapshots are kept for 1 day"
    echo "* Daily snapshots are kept for 1 week"
    echo "* Weekly snapshots are kept for 1 month"
    echo ""
    exit 1
}

# Make sure the logfile exists before appending to it
if [ ! -f "$LOGFILE" ]; then
    touch "$LOGFILE"
fi

case ${interval} in
'async')
    # take snapshots for asynchronous DR purposes, keep for one hour
    # (-s and -S skip pools that are resilvering or scrubbing)
    for dset in $DATASETS
    do
        $ZSNAP -v -s -S -a 1h "$dset" >> "$LOGFILE"
    done
    # send snapshots to failover host (-T), one dataset at a time (-N),
    # deleting destination snapshots that no longer exist locally (-d)
    for dset in $DATASETS
    do
        $ZXFER -dFv -T root@$DHOST -N "$dset" zroot >> "$LOGFILE"
    done
    echo "" >> "$LOGFILE"
    ;;
'hourly')
    # take snapshots, keep for one day
    for dset in $DATASETS
    do
        $ZSNAP -s -S -a 1d "$dset" >> "$LOGFILE"
    done
    echo "" >> "$LOGFILE"
    ;;
'daily')
    # take snapshots, keep for one week
    for dset in $DATASETS
    do
        $ZSNAP -s -S -a 1w "$dset" >> "$LOGFILE"
    done
    # purge snapshots according to TTL
    $ZSNAP -s -S -d >> "$LOGFILE"
    echo "" >> "$LOGFILE"
    ;;
'weekly')
    # take snapshots, keep for one month
    for dset in $DATASETS
    do
        $ZSNAP -s -S -a 1m "$dset" >> "$LOGFILE"
    done
    echo "" >> "$LOGFILE"
    ;;
'purge')
    # purge snapshots according to TTL (verbose, output goes to the terminal)
    $ZSNAP -v -s -S -d
    ;;
*)
    # missing or unrecognized interval
    usage
    ;;
esac

Disclaimer

I have tested this solution with FreeBSD 9 and recent SmartOS releases and can only say that it works for me. I make no guarantees of any kind that it will work for you, but hope that it does and that you find it useful.